Updated on by Hayley Brown

Unlocking Deep Data Ingestion with Cyclr: A Practical Guide for AI-Driven SaaS

As SaaS products increasingly rely on real-time insights, personalised user experiences, and AI-driven automation, the need for deep, continuous data ingestion has never been greater. Yet integrating and orchestrating high-frequency, high-volume data across diverse platforms remains a technical and architectural challenge.

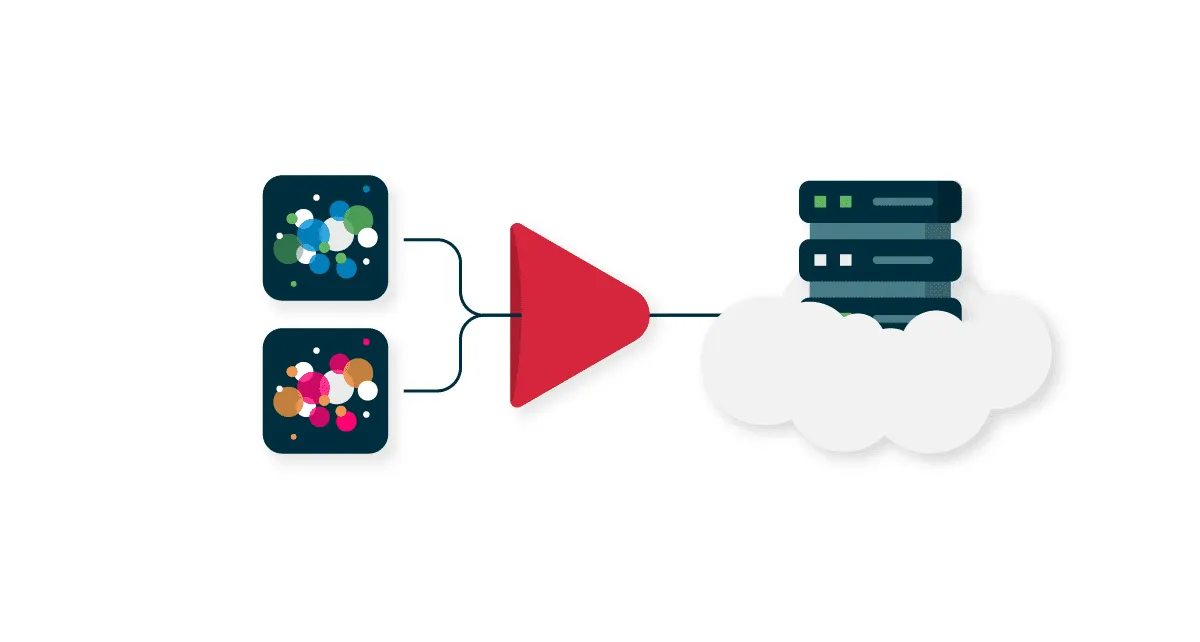

That’s where Cyclr, an embedded iPaaS (Integration Platform as a Service), comes into play. While Cyclr is traditionally known for powering SaaS integrations and workflow automation, it can also be strategically configured to enable deep data ingestion, fuelling everything from semantic search in vector databases like Pinecone to live customer analytics and AI model pipelines.

In this post, we’ll explore how to architect deep data ingestion workflows using Cyclr, including real-world use cases, best practices, and tips to overcome common limitations. Whether you’re building an AI-powered support system, syncing high-frequency event data, or enhancing your product with real-time personalisation, this guide will help you unlock the full potential of Cyclr in your data pipeline strategy.

What is deep data ingestion?

Deep data ingestion is the process of pulling data from multiple sources into a single database for storage, processing and analysis, while maintaining a high level of data quality and insight. That database is accessible and consistent, and it can be queried to deliver insightful, actionable data to end users.

Who uses deep data ingestion?

Deep data ingestion is used by organisations that deal with large-scale, high-velocity and diverse data sources, especially when they need real-time or near-real-time processing for AI, analytics, monitoring or personalisation. These data-intensive environments also tend to require complex transformations and scalable architectures.

Tech and SaaS companies, for example, use deep data ingestion to gather user actions, logs and metrics across distributed systems for analytics, personalisation, observability and machine learning (ML). eCommerce and retail businesses use it to gather behavioural data, inventory updates and transaction logs that support AI-driven recommendations, supply chain optimisation and demand forecasting.

How do you achieve deep data ingestion using Cyclr?

To achieve deep data ingestion using Cyclr you’ll need to leverage its capabilities to connect, automate, and route high-volume, high-frequency data across systems. While Cyclr is typically used for workflow automation and third-party SaaS integrations, you can architect it for deep data ingestion with thoughtful design.

Cyclr is best suited for:

- Trigger-based and scheduled ingestion

- ETL-like workflows across APIs and databases

- Orchestrating enrichment, transformation, and routing

- Embedding custom logic into SaaS products

Source Connectors

The first key component of deep data ingestion with Cyclr is the source connectors. Cyclr’s pre-built connectors can ingest data from:

- SaaS applications such as Salesforce, HubSpot and Shopify, drawn from a library of over 600 connectors

- Databases such as MySQL and Postgres

- Webhooks, for streaming-style or event-driven ingestion

- FTP/S3, for batch ingestion

Together, these support structured and semi-structured data via APIs and file ingestion.

Real-Time or Scheduled Workflows

The second component is how a workflow is triggered. Cyclr lets you choose between webhooks, for real-time ingestion from apps that can push events, and polling or scheduled tasks, which fetch data at a fixed interval (every few minutes or hours) from apps that have an API but no webhook support.
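For the scheduled option, a common pattern is to keep a cursor (a “high-water mark”) holding the newest timestamp seen so far, and on each run keep only records updated after it. The Python sketch below illustrates that logic outside Cyclr; the `fetch_since` callable and the `id`/`updated_at` record fields are hypothetical stand-ins for whatever the source API actually returns.

```python
from __future__ import annotations

from datetime import datetime
from typing import Callable, Iterable


def poll_once(
    fetch_since: Callable[[str], Iterable[dict]],
    cursor: str,
) -> tuple[list[dict], str]:
    """Run one scheduled poll and return (new_records, next_cursor).

    `fetch_since` is a hypothetical wrapper around the source API; records are
    assumed to carry an ISO 8601 `updated_at` timestamp with a UTC offset.
    """
    since = datetime.fromisoformat(cursor)
    fresh = [
        record
        for record in fetch_since(cursor)
        if datetime.fromisoformat(record["updated_at"]) > since
    ]
    if not fresh:
        # Nothing new: keep the old cursor so the next run asks the same question.
        return [], cursor
    newest = max(fresh, key=lambda record: datetime.fromisoformat(record["updated_at"]))
    # Advance the high-water mark to the newest timestamp actually seen.
    return fresh, newest["updated_at"]


# Example run against an in-memory stand-in for a source API.
SOURCE = [
    {"id": 1, "updated_at": "2024-01-01T09:00:00+00:00"},
    {"id": 2, "updated_at": "2024-01-01T10:30:00+00:00"},
]


def fake_fetch_since(cursor: str) -> list[dict]:
    # A real API would filter server-side; this stub returns everything.
    return SOURCE


records, cursor = poll_once(fake_fetch_since, "2024-01-01T10:00:00+00:00")
```

A strict “greater than” comparison can skip records that share the cursor’s exact timestamp; comparing with “greater than or equal” and de-duplicating on `id` is a more defensive variant.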

In-Built Tools

Next, use Cyclr’s in-built tools for transformation and enrichment. These let you clean and normalise data (for instance, date formats and string casing), add metadata such as an ingestion timestamp and source tag, and split or aggregate payloads before forwarding them. For more complex enrichment, Cyclr also lets you use JavaScript snippets or conditional logic.
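To make those transformations concrete, here is a minimal Python sketch of the same kind of logic; it is not Cyclr’s scripting API (which, as noted above, uses JavaScript). It normalises casing, whitespace and a date format, tags each record with an ingestion timestamp and source, and splits a multi-item payload into one record per item. The field names, the DD/MM/YYYY input format and the `parent_id` key are assumptions for illustration.

```python
from __future__ import annotations

from datetime import datetime, timezone


def normalise_record(raw: dict, source: str, now: datetime | None = None) -> dict:
    """Clean one payload and tag it with ingestion metadata.

    Field names and the DD/MM/YYYY input date format are assumptions for
    illustration, not a Cyclr or Shopify schema.
    """
    ingested_at = now or datetime.now(timezone.utc)
    created = datetime.strptime(raw["created"].strip(), "%d/%m/%Y")
    return {
        # Normalise casing and whitespace so the same customer matches across sources.
        "email": raw["email"].strip().lower(),
        "name": " ".join(raw["name"].split()).title(),
        # Convert the source's date format to ISO 8601.
        "created_date": created.date().isoformat(),
        # Metadata added during ingestion.
        "_ingested_at": ingested_at.isoformat(),
        "_source": source,
    }


def split_payload(payload: dict, items_key: str) -> list[dict]:
    """Split one payload holding a list of items into one record per item.

    Each item keeps a hypothetical `parent_id` pointing back to the payload.
    """
    return [dict(item, parent_id=payload["id"]) for item in payload.get(items_key, [])]
```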

Destination Connectors

Finally, use Cyclr’s destination connectors to deliver the processed data. Destinations include:

- Internal databases or data lakes, via SQL, S3 or Azure Blob Storage

- Event hubs such as Kafka or Pub/Sub, reached via webhook proxies

- Vector databases such as Pinecone, reached via a custom connector or an API call step
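Where no pre-built connector fits, an API call step amounts to an HTTP request. As a hedged illustration, the Python below builds (but does not send) a request to Pinecone’s data-plane upsert endpoint, `POST https://<index-host>/vectors/upsert` with an `Api-Key` header. The index host, API key, namespace, metadata and the toy three-dimensional vector are placeholders; confirm the current request format, including any API version header, against Pinecone’s documentation.

```python
from __future__ import annotations

import json
import urllib.request


def build_pinecone_upsert(
    index_host: str,
    api_key: str,
    vectors: list[dict],
    namespace: str = "",
) -> urllib.request.Request:
    """Build a Pinecone upsert request; each vector is {"id", "values", "metadata"}."""
    body: dict = {"vectors": vectors}
    if namespace:
        body["namespace"] = namespace
    return urllib.request.Request(
        url=f"https://{index_host}/vectors/upsert",
        data=json.dumps(body).encode("utf-8"),
        headers={"Api-Key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


request = build_pinecone_upsert(
    index_host="example-index.svc.example.pinecone.io",  # placeholder host
    api_key="YOUR_API_KEY",  # placeholder key
    vectors=[
        {
            "id": "interaction-1",
            "values": [0.12, -0.03, 0.88],  # real vectors match the index dimension
            "metadata": {"source": "shopify"},
        }
    ],
    namespace="support",
)
# To actually send it: urllib.request.urlopen(request)
```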

How to query and deliver ingested data using Cyclr

Let’s dive into an example: a deep data ingestion pipeline built with Cyclr. The goal is to ingest customer interaction data from Shopify into Pinecone to power semantic search in a support chatbot.

Cyclr Workflow:

- Trigger: Shopify webhook fires on new customer interaction.

- Transform: Clean/normalise interaction text.

- Enrich: Add product metadata via internal API call.

- Embed: Call an external API, for example OpenAI, to convert the text into a vector.

- Send to Pinecone: Use a custom HTTP connector to upsert the vector into Pinecone.
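The workflow steps above can be sketched as a single Python function whose external calls are passed in. This is not how Cyclr represents a workflow internally; it only shows the data flow. `lookup_product` stands in for the internal product API and `embed` for an embeddings call such as OpenAI’s; both are hypothetical here, and the stubs make the example runnable offline.

```python
from __future__ import annotations

from typing import Callable


def interaction_to_vector_record(
    interaction: dict,
    lookup_product: Callable[[str], dict],
    embed: Callable[[str], list[float]],
) -> dict:
    """Turn one webhook payload into a record ready for a Pinecone upsert."""
    # Transform: collapse runs of whitespace in the interaction text.
    text = " ".join(interaction["text"].split())
    # Enrich: fetch product metadata from a (hypothetical) internal API.
    product = lookup_product(interaction["product_id"])
    # Embed: convert the cleaned text into a vector.
    values = embed(text)
    # Shape the result as a Pinecone vector: id, values and metadata.
    return {
        "id": f"interaction-{interaction['id']}",
        "values": values,
        "metadata": {
            "text": text,
            "product_title": product["title"],
            "source": "shopify",
        },
    }


# Offline stubs standing in for the real API calls.
def fake_lookup_product(product_id: str) -> dict:
    return {"title": f"Product {product_id}"}


def fake_embed(text: str) -> list[float]:
    # A real embedding model returns hundreds of dimensions; this is a toy.
    return [float(len(text)), 0.0, 1.0]


record = interaction_to_vector_record(
    {"id": 42, "product_id": "p1", "text": "  Where is   my order? "},
    fake_lookup_product,
    fake_embed,
)
```

Passing the calls in as functions keeps each step testable in isolation; in a Cyclr workflow each of them would instead be its own step.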

The benefit of Cyclr here is that it acts as an orchestrator, routing and preparing data for downstream AI systems.

Tips for optimizing Cyclr for deep data ingestion:

| Strategy | Why it matters |
|---|---|
| Use Webhooks Over Polling | Reduces latency and improves scalability |
| Batch API Calls When Possible | Helps you stay within API rate limits and reduces overhead |
| Custom Connector Development | Supports destinations such as Pinecone or Elasticsearch |
| Async Queuing via Webhooks | Sends data to a message broker such as Kafka (or to a serverless function such as AWS Lambda) for decoupled processing |
| Embed Data Quality Checks | Avoids garbage-in scenarios, especially for AI |
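Two of these tips, batching and data quality checks, can be combined in a pre-send step. The Python sketch below rejects records whose vectors are missing, the wrong length, or contain non-finite numbers, then groups the rest into fixed-size batches. The default batch size of 100 is an arbitrary assumption; check your destination’s documented request limits.

```python
from __future__ import annotations

import math
from typing import Iterator


def is_valid(record: dict, dimension: int) -> bool:
    """Basic quality gate: non-empty id and a finite vector of the expected length."""
    values = record.get("values")
    return (
        bool(record.get("id"))
        and isinstance(values, list)
        and len(values) == dimension
        and all(
            isinstance(v, (int, float)) and not isinstance(v, bool) and math.isfinite(v)
            for v in values
        )
    )


def chunked(items: list[dict], size: int) -> Iterator[list[dict]]:
    """Yield consecutive slices of at most `size` items."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def prepare_batches(
    records: list[dict], dimension: int, batch_size: int = 100
) -> tuple[list[list[dict]], list[dict]]:
    """Split records into send-ready batches and a list of rejects to log or review."""
    valid = [r for r in records if is_valid(r, dimension)]
    rejected = [r for r in records if not is_valid(r, dimension)]
    return list(chunked(valid, batch_size)), rejected


# Example: five good records, one with the wrong dimension, one with no id.
records = [{"id": f"r{i}", "values": [0.1, 0.2, 0.3]} for i in range(5)]
records.append({"id": "bad-dim", "values": [0.1, 0.2]})
records.append({"id": "", "values": [0.1, 0.2, 0.3]})
batches, rejected = prepare_batches(records, dimension=3, batch_size=2)
```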

Summary of how to use Cyclr for deep data ingestion:

| Step | Role in Ingestion |
|---|---|
| Connect to source APIs/webhooks | Capture new data |
| Schedule/poll/trigger workflows | Control ingestion cadence |
| Enrich and transform data | Prepare it for downstream use |
| Route to AI/analytics endpoints | Push to vector DBs, LLMs, or warehouses |
| Monitor and handle errors | Ensure reliability |
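For the “monitor and handle errors” step, a widely used generic pattern for transient failures such as rate limiting (HTTP 429) or temporary server errors is to retry with exponential backoff and jitter. The Python sketch below shows that generic pattern, not Cyclr’s built-in error handling; `TransientError` is a hypothetical exception type that your own API-calling code would raise for retryable failures.

```python
from __future__ import annotations

import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures (e.g. HTTP 429 or 503)."""


def with_retries(
    call: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `call()`, retrying TransientError with exponential backoff and full jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    attempt = 1
    while True:
        try:
            return call()
        except TransientError:
            if attempt >= max_attempts:
                raise  # Give up and surface the error to monitoring/alerting.
            # Wait a random time of up to base_delay * 2^(attempt - 1) seconds.
            sleep(random.uniform(0, base_delay * 2 ** (attempt - 1)))
            attempt += 1


# Example: a call that is rate limited twice, then succeeds.
calls = {"count": 0}


def flaky_send() -> str:
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientError("rate limited")
    return "ok"


result = with_retries(flaky_send, sleep=lambda seconds: None)  # skip real waiting in the demo
```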

Other Use Cases for Deep Data Ingestion

Integrated RAG (Retrieval Augmented Generation) Knowledge Agent with Pinecone

This use case is a powerful RAG Knowledge Agent that integrates Slack, Google Drive, Pinecone (a vector database) and ChatGPT. The agent answers user queries by picking up questions posted in a designated Slack channel, retrieving the corresponding video scripts from Pinecone’s vector database, and using ChatGPT to generate contextual responses based on a tailored prompt.

We start the workflow with a collection of PDF documents containing video transcripts and their corresponding YouTube links. We upload these files to Google Drive, where a Cyclr integration workflow picks them up.

Using OCR technology powered by Mistral AI, the content is extracted and converted into Markdown format. From there, it’s upserted into Pinecone as vectors.
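The article does not say how the extracted Markdown is split before embedding. Because the agent later returns a specific video and chapter, one plausible approach, assumed here, is to split each transcript at its Markdown headings and keep the chapter title and video link as metadata on each chunk. The heading convention, field names and placeholder URL below are assumptions.

```python
from __future__ import annotations

import re

# Assumption: chapters are marked by Markdown headings such as "## Setup".
HEADING = re.compile(r"^#{1,6}\s+(.+?)\s*$")


def chunk_by_heading(markdown: str, video_url: str) -> list[dict]:
    """Split one transcript into per-chapter chunks carrying the video link."""
    sections: list[tuple[str, list[str]]] = [("Introduction", [])]
    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            # Start a new chapter at each heading.
            sections.append((match.group(1), []))
        else:
            sections[-1][1].append(line)
    chunks = []
    for chapter, lines in sections:
        text = "\n".join(lines).strip()
        if text:  # Skip sections with no body (e.g. an empty preamble).
            chunks.append({"chapter": chapter, "video_url": video_url, "text": text})
    return chunks


transcript = "# Setup\nInstall the connector.\n\n# Authentication\nAdd your API key.\n"
chunks = chunk_by_heading(transcript, "https://www.youtube.com/watch?v=example")
```

Each chunk’s `text` would then be embedded, with `chapter` and `video_url` stored as vector metadata so that a match can be traced back to its source video and chapter.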

Now, imagine a user posts a question in the Video Knowledge Agent Slack channel, like:

“I would like to know how to set up the Oracle NetSuite connector.”

The Knowledge Agent processes the request, searches the Pinecone vector database, and returns the most relevant video and chapter—along with a clear, detailed explanation.
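Behind that answer are two requests: a similarity query against Pinecone using the embedded question, and a ChatGPT request whose prompt includes the retrieved excerpts. The Python sketch below covers only the data shaping. The query body uses Pinecone’s `vector`, `topK` and `includeMetadata` fields, and the response is assumed to contain a `matches` list with `metadata`; the metadata keys (`chapter`, `video_url`, `text`) and the prompt wording are hypothetical, not the tutorial’s actual prompt.

```python
from __future__ import annotations


def build_query_body(question_vector: list[float], top_k: int = 3) -> dict:
    """Body for a Pinecone query request (POST https://<index-host>/query)."""
    return {"vector": question_vector, "topK": top_k, "includeMetadata": True}


def build_prompt(question: str, query_response: dict) -> str:
    """Assemble a grounded prompt from the matches returned by the query."""
    excerpts = []
    for match in query_response.get("matches", []):
        meta = match.get("metadata", {})
        excerpts.append(
            f"Chapter: {meta.get('chapter', 'unknown')}\n"
            f"Video: {meta.get('video_url', 'unknown')}\n"
            f"{meta.get('text', '')}"
        )
    context = "\n\n".join(excerpts)
    return (
        "Answer the question using only the transcript excerpts below. "
        "Name the video and chapter you relied on.\n\n"
        f"Excerpts:\n{context}\n\n"
        f"Question: {question}"
    )


# Example with a hand-written response in the assumed shape.
response = {
    "matches": [
        {
            "id": "netsuite-setup",
            "score": 0.91,
            "metadata": {
                "chapter": "Setup",
                "video_url": "https://www.youtube.com/watch?v=example",
                "text": "Install the connector.",
            },
        }
    ]
}
prompt = build_prompt("How do I set up the Oracle NetSuite connector?", response)
```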

We’ve built a full tutorial for the Integrated RAG Knowledge Agent with Pinecone, so check it out!

Streamlining Vector Operations with Pinecone

This is a simple workflow that streamlines vector operations using Pinecone. First, we’ll load a set of texts from a Google Sheet containing information about our various Application Connectors. Using the OpenAI Connector, we’ll convert these texts into vector embeddings and insert them into a Pinecone vector database.

Next, we’ll take a set of questions from another Google Sheet, transform each question into a vector, and perform a similarity search against the previously stored vectors in Pinecone.

Finally, we’ll write the matched results back to the Google Sheet for reference.
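Pinecone runs this similarity search server-side over an index, using the distance metric chosen when the index was created (cosine, dot product or Euclidean). The article does not say which metric this workflow uses; the brute-force cosine version below simply shows what “similarity” means when a question vector is compared with stored vectors. The connector names and toy two-dimensional vectors are made up for illustration.

```python
from __future__ import annotations

import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # Treat similarity to a zero vector as 0 rather than dividing by 0.
    return dot / (norm_a * norm_b)


def top_k(
    query: list[float], stored: dict[str, list[float]], k: int = 1
) -> list[tuple[str, float]]:
    """Brute-force nearest neighbours: score every stored vector, keep the best k."""
    scored = [(key, cosine_similarity(query, vector)) for key, vector in stored.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


stored = {
    "hubspot": [1.0, 0.0],
    "salesforce": [0.0, 1.0],
    "shopify": [0.7, 0.7],
}
matches = top_k([1.0, 0.1], stored, k=2)
```

A vector database replaces this linear scan with an index built for fast approximate search, which is what makes the approach practical at scale.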

This integration workflow can easily be adapted to a wide range of use cases. For example, you could build an HR agent to help sort and analyse résumés, or an education agent to search school catalogues and provide quick answers to student enquiries. With Cyclr’s low-code platform, you can focus on building your own custom use case quickly and without the hassle of a complex IT setup.

We’ve built a full tutorial for Streamlining Vector Operations with Pinecone, so check it out!

Conclusion: Powering Intelligent Workflows with Cyclr

Cyclr may be best known as an embedded iPaaS for SaaS integrations, but it’s also a flexible and powerful tool for orchestrating deep data ingestion pipelines, from querying rich datasets across platforms to delivering enriched, AI-ready data to modern destinations like vector databases, analytics engines and ML workflows.

By combining scheduled or real-time data extraction with smart transformation and seamless delivery, Cyclr enables SaaS teams to build intelligent, scalable workflows that drive personalisation, automation and insight, all without heavy engineering lift. Whether you’re enriching support data for semantic search, syncing behavioural events to AI pipelines, or connecting your product to enterprise data ecosystems, Cyclr provides the building blocks to make it happen.

With the right connectors, thoughtful orchestration, and external API integrations, Cyclr can be the glue that brings together your ingestion, enrichment, and AI delivery strategy.

Ready to Build Smarter Data Workflows?

If you’re looking to streamline deep data ingestion, automate delivery to AI and analytics platforms, and embed powerful integrations directly into your SaaS product, Cyclr has you covered.

👉 Book a demo to see how Cyclr can power your ingestion and delivery pipelines

👉 Or start building with our visual workflow designer today

Let’s turn your data into action, in real time.