Updated on by Hayley Brown

AI features are becoming synonymous with SaaS applications, and the starting point for many SaaS products is developing their own AI knowledge agent or chatbot. These agents typically connect to your data via a Retrieval Augmented Generation (RAG) approach. RAG helps large language models (LLMs) provide more relevant responses by allowing the model to draw on external data sources for tailored, context-aware replies.

Today we will demonstrate how you can use Cyclr to rapidly build an AI knowledge agent. Integrating your customer data makes the chatbot more useful and provides a much more tailored experience.

AI Knowledge Agent: Sales Tutorial

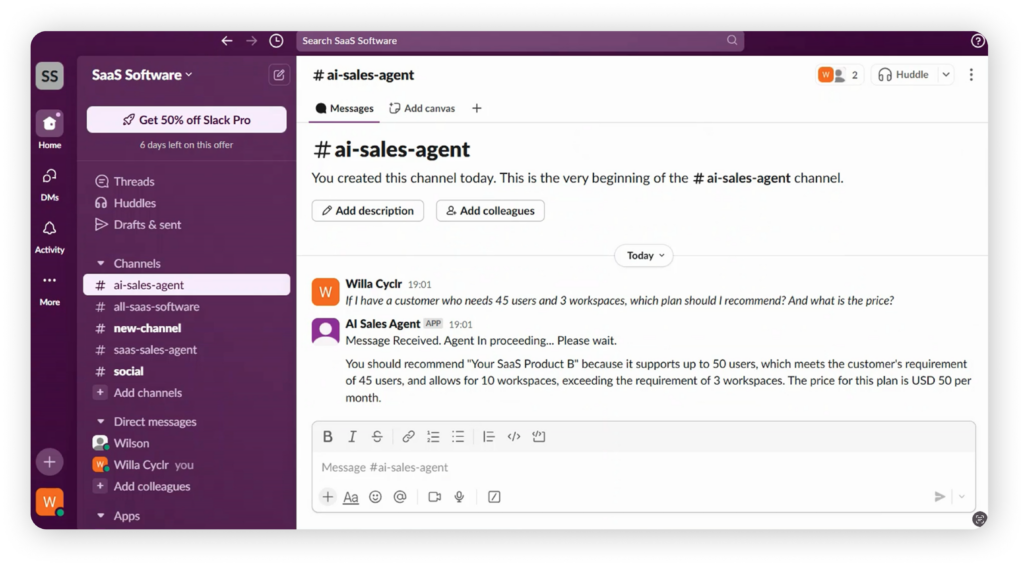

In this example, we focus on developing an AI agent to help your sales team. We use Slack, ChatGPT, Google Sheets and your own SaaS application (a fictional app in this tutorial). A question is asked in a Slack channel, and the agent responds in the same channel.

What you’ll specifically learn is how to create a webhook integration with Slack and how to work with an LLM (ChatGPT) with security controls. This is just one example, and the data source could be replaced with anything. For instance, Confluence could be used to create a company wiki. All the elements are interchangeable.

We are highlighting one way that, as a Cyclr subscriber, you can leverage the tool alongside your customer-facing integrations to get extra internal benefits above and beyond our embedded capabilities.

Watch the full AI Knowledge Agent Tutorial

Introduction to the AI Sales Agent

There are generally three elements that make up an AI knowledge chatbot: the chatbot application (in this instance ChatGPT), a database (Google Sheets) and a SaaS application. For this example, we’ve made a fictional SaaS application to stand in for yours.

Let’s jump into an example to see how the AI knowledge agent works in practice. Our Google Sheet holds product plan information, configuration details and pricing for a SaaS application. The LLM processes this data to generate accurate responses to the query a user has sent via the SaaS knowledge agent Slack channel.

For instance, a user might ask: “I need 45 users and three workspaces, which plan should I recommend?” The AI agent processes the request, searches the product knowledge database and provides the most suitable plan along with a detailed explanation.

Now let’s consider another user who does not have permission to access pricing details. If they ask the same question in the same channel, the AI agent will generate a general response without revealing any sensitive pricing information. The best part is that we can build this AI knowledge agent entirely without coding using Cyclr, an embedded integration platform.

Setting Up the Slack Connection

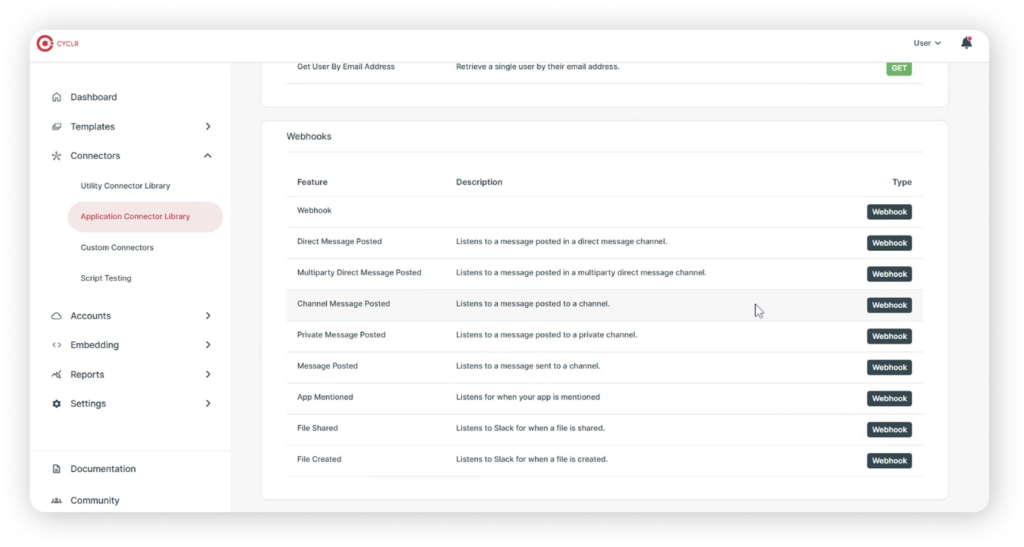

The first step is installing the Slack Connector. Cyclr has already handled the heavy lifting by simplifying authentication and converting Slack’s API endpoints into ready-to-use methods. These methods can be accessed directly in Cyclr’s low-code workflow development platform.

Installing the Slack Connector is quick and straightforward. Simply enter the client ID, client secret and scopes, which can be retrieved from Slack. Should you need extra guidance, we’ve created a step-by-step installation guide to walk you through the process.

Now let’s build the first integration template by setting up an event trigger that listens for messages posted in specific Slack Channels.

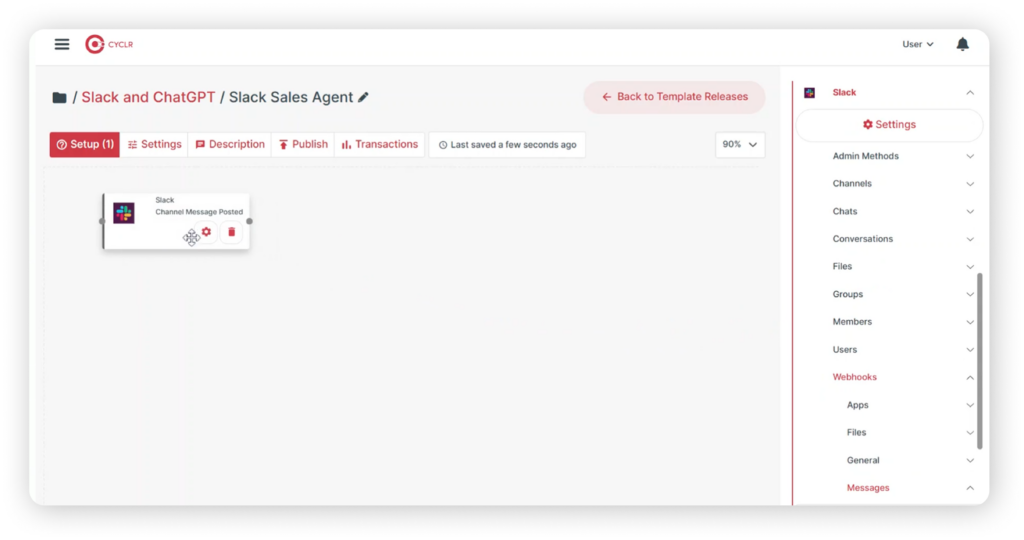

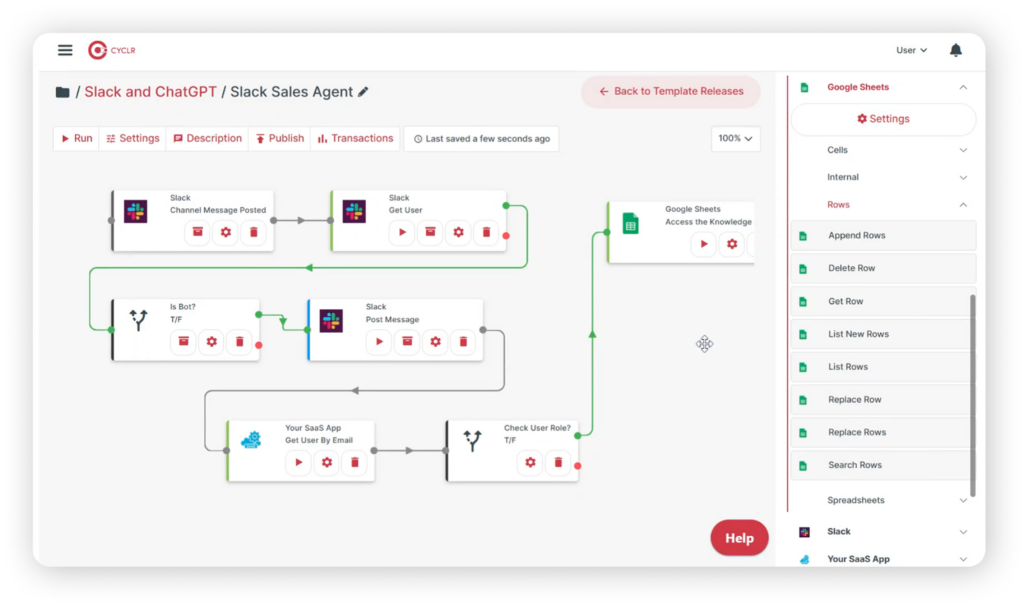

To begin, locate Slack under the Webhooks category, select the Channel Message Posted method and drag it onto the empty canvas. Once added, copy the webhook URL from the method. Next, open the Event Subscriptions feature within your Slack app, enable events and paste the copied webhook URL into the Request URL field. Then, under the Subscribe to events on behalf of users section, add the workspace event message.channels. With this setup, the integration is now listening for new messages posted in the specified Slack channels, allowing the AI agent to process incoming queries in real time.
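
For readers curious about what happens behind the webhook URL, the following is a minimal sketch of how a receiver for Slack events typically behaves. It is not Cyclr’s implementation. The function name `handle_slack_event` is hypothetical; the `url_verification` challenge and the `event_callback` wrapper are part of Slack’s Events API.

```python
from typing import Any, Optional


def handle_slack_event(payload: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Return the challenge reply, the extracted message fields, or None."""
    # When a Request URL is saved, Slack posts a one-off challenge
    # that the receiver must echo back to prove it owns the URL.
    if payload.get("type") == "url_verification":
        return {"challenge": payload["challenge"]}

    # Subscribed events arrive wrapped in an "event_callback" envelope.
    event = payload.get("event", {})
    if payload.get("type") == "event_callback" and event.get("type") == "message":
        return {
            "channel": event.get("channel"),  # where to post the reply
            "user": event.get("user"),        # sender's Slack user ID
            "text": event.get("text", ""),    # the question itself
        }

    # Any other event type is ignored in this sketch.
    return None
```

The three extracted fields are the ones the later steps rely on: the user ID for the Get User lookup, the channel for the reply and the text for the prompt.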

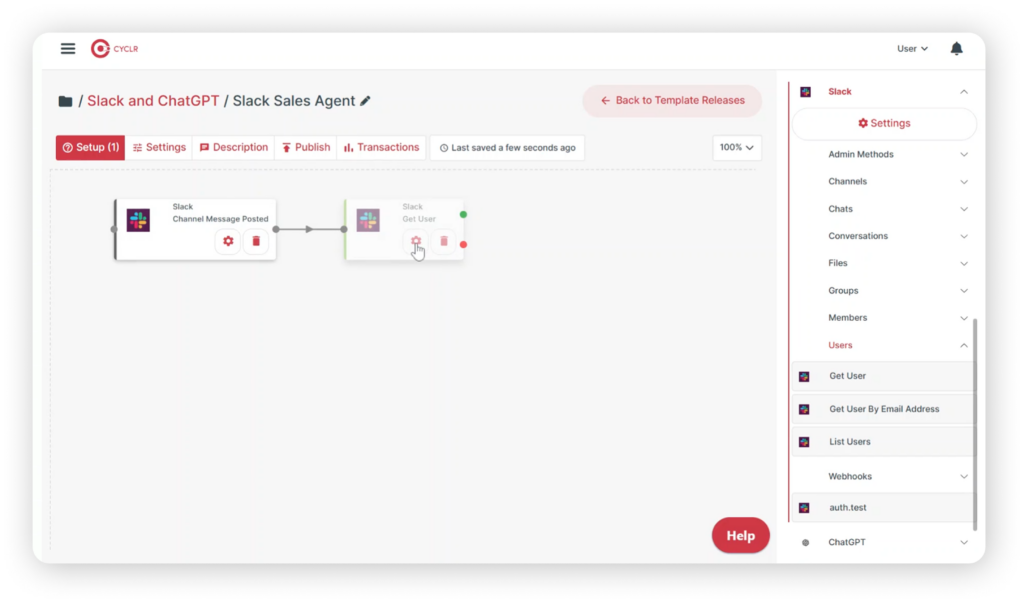

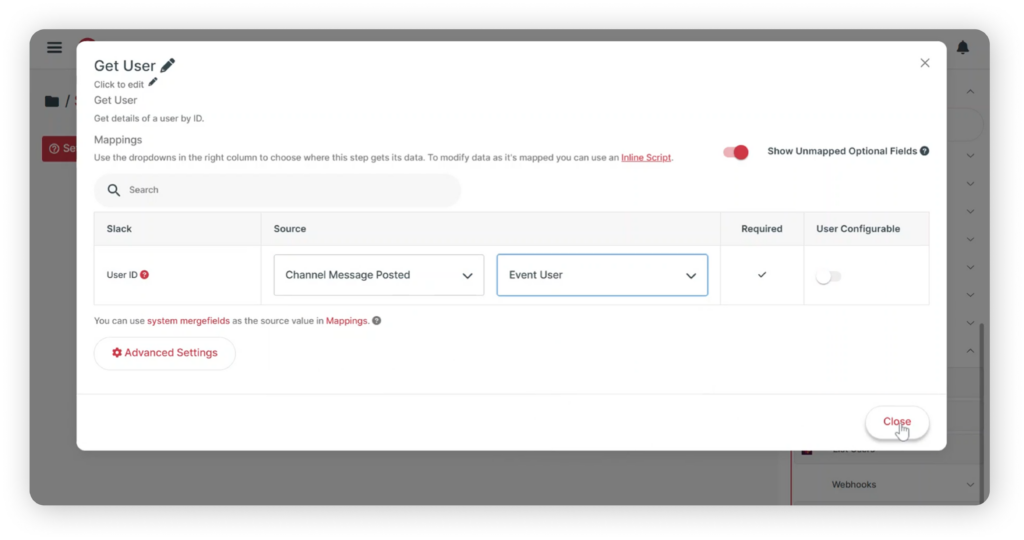

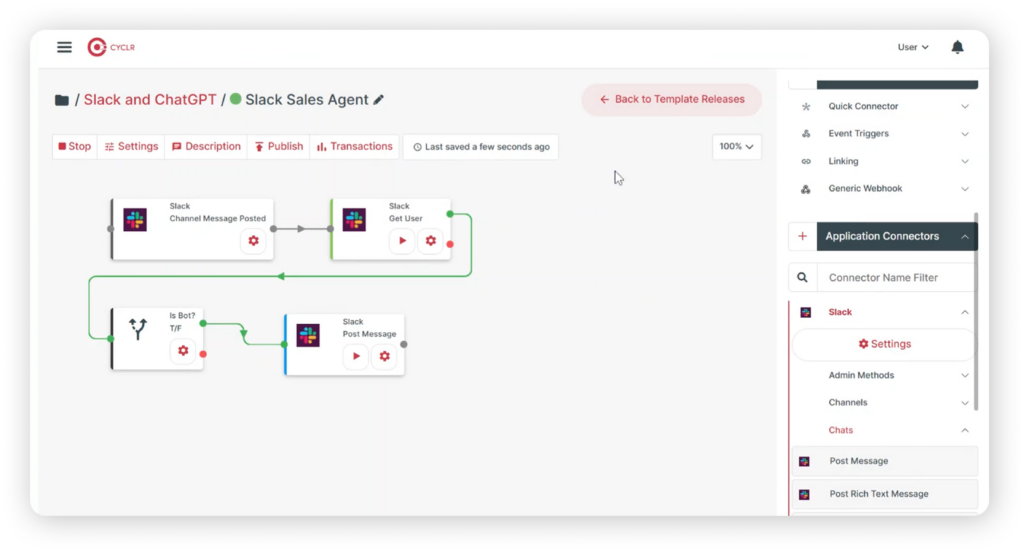

Next, we need to retrieve user information for the message sender. To do this, add the Get User method and map the relevant fields from the first step. The user ID should be sourced from the event, as this is the user ID of the sender. Now add a Decision step to check whether the message comes from a bot or a real user. Since we only want to create responses for real users, this step ensures that bot messages are ignored. Once that’s set up, go to the Chats category and add the Post Message method, then configure the fields so that the response is sent in the same channel where the message was received. Then add a simple reply confirming that the message has been received.

With this basic setup complete, it’s time for a quick test run of the workflow. Head over to Slack and send a test message to see if the integration is working as expected.
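
The bot check matters because the agent’s own replies are also messages in the channel. Without the check, each reply could trigger the workflow again. As a rough sketch of the logic that Decision step expresses, assuming the Slack message event and the `users.info` response are available as dictionaries (the function name `is_from_bot` is hypothetical):

```python
def is_from_bot(event: dict, user_info: dict) -> bool:
    """Return True when a Slack message should be ignored as bot traffic."""
    # Messages posted by bots carry a bot_id, and classic bot posts
    # also use the "bot_message" subtype.
    if event.get("bot_id") or event.get("subtype") == "bot_message":
        return True
    # The users.info response flags bot accounts with user.is_bot.
    return bool(user_info.get("user", {}).get("is_bot", False))
```

Only messages where this returns `False` would continue to the reply step.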

User Role Validation & Data Retrieval

The next step in the integration is to check the user role and authorisation level to determine whether they can access the knowledge base. The workflow retrieves user information from your SaaS application using a pre-built native connector. Typically Cyclr takes care of this process for you, eliminating the need for any technical setup. Cyclr’s Connector Team ensures that your API endpoints are seamlessly converted into ready-to-use methods making integration effortless.

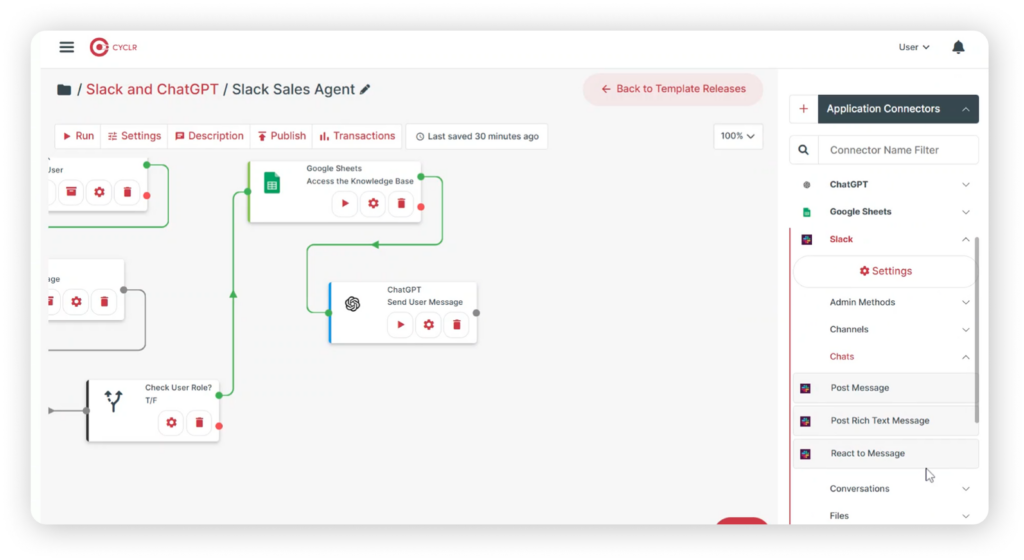

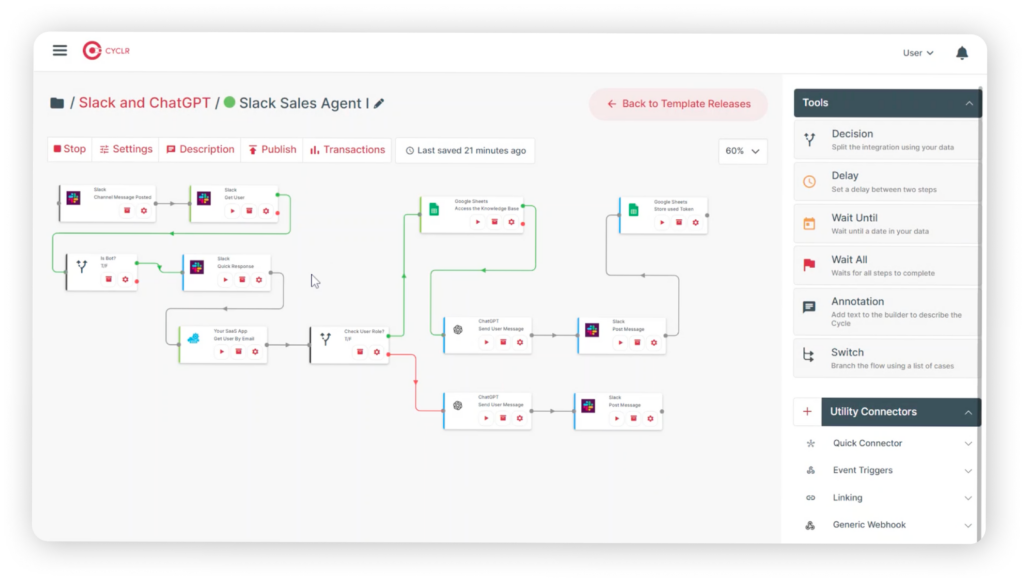

For the next step, a Decision step is added to the workflow. In this example, if the user role type equals one, the user is authorised to access the knowledge base. The Decision step can also be made more advanced using tools like Switch to handle multiple conditions at once. When the authorisation is confirmed, the workflow follows the green route. It then connects to Google Sheets, retrieves the relevant data rows and provides this information to the LLM to generate an appropriate response.

The data source isn’t limited to Google Sheets. It could also be PDF files in Google Drive or documents in Dropbox. It could even be a database within Cyclr’s extensive Connector library of 600+ SaaS applications. Integrating with different data sources is seamless and efficient. Just like in this example, you can simply drag and drop to configure the Sheet ID and sheet name you want to connect with, making the setup quick and effortless.
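
Expressed as code, the Decision and Switch logic might look like the sketch below. The field name `role_type`, the second role value and the route labels are assumptions made for illustration; only the rule that role type one is authorised comes from the example.

```python
# From the example: role type 1 may access the knowledge base.
AUTHORISED_ROLE_TYPE = 1


def can_access_knowledge_base(user: dict) -> bool:
    """Decision step: a single yes/no condition on the user's role type."""
    return user.get("role_type") == AUTHORISED_ROLE_TYPE


# Switch-style variant: several role types mapped to routes at once.
# Role type 2 and the route names are hypothetical.
ROUTE_BY_ROLE_TYPE = {1: "knowledge_base", 2: "general_only"}


def route_for(user: dict) -> str:
    """Pick a route, defaulting to the restricted one for unknown roles."""
    return ROUTE_BY_ROLE_TYPE.get(user.get("role_type"), "general_only")
```

Defaulting unknown roles to the restricted route keeps sensitive data out of reach when the user record is incomplete.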

Connecting ChatGPT for AI-Powered Responses

Now let’s move to the core of the workflow, connecting to the LLM. In this case, we’ll integrate with ChatGPT. To do this, simply select the Send User Message method under the ChatGPT connector. Next, configure a few basic settings, such as choosing the model type. In this case, we’ll use GPT-4. For the prompt message, we’ll combine static text with dynamic information captured in the previous steps. This includes the user’s question from Slack and the data summary retrieved from Google Sheets.

By structuring the prompt this way, we ensure the LLM generates accurate and context-aware responses. Once we receive the response from the LLM, we can send it back to the user through Slack. To do this, use the Post Message method, configure the channel and source the text from the LLM result. This ensures the user receives the appropriate answer in the Slack channel they used to ask the question.
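
To make the prompt structure concrete, here is a small sketch of combining static text with the Slack question and the sheet rows. The function names, the wording of the static instruction and the row layout are assumptions. The `role` and `content` message shape follows the chat message format used by OpenAI’s chat models, which may differ from the fields exposed by the Send User Message method.

```python
def rows_to_context(rows: list[dict[str, str]]) -> str:
    """Flatten sheet rows into one 'column: value' line per row."""
    return "\n".join(
        "; ".join(f"{key}: {value}" for key, value in row.items())
        for row in rows
    )


def build_messages(question: str, rows: list[dict[str, str]]) -> list[dict[str, str]]:
    """Combine a static instruction with the dynamic question and data."""
    # Static text: the same for every request (hypothetical wording).
    system = (
        "You are a sales assistant. Answer using only the product data "
        "supplied in the conversation."
    )
    # Dynamic text: the sheet rows and the question captured from Slack.
    user = f"Product data:\n{rows_to_context(rows)}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]
```

Keeping the instruction static and the data dynamic means the same workflow step can serve any question without being edited.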

Testing, Deployment & Expanding Capabilities

So, how would this workflow look if the user doesn’t have authorisation to access the knowledge base? In that case, the workflow skips the Google Sheets connection. Instead, it goes directly to the LLM, and the system responds to the user based on the information available without the knowledge base. And that’s it. The workflow is complete.
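
The two branches can be summarised in one short sketch. This is an illustration of the control flow only, not of how Cyclr executes workflows. The function `run_agent` and its three callables are hypothetical stand-ins for the Google Sheets, ChatGPT and Slack steps.

```python
from typing import Callable


def run_agent(
    question: str,
    channel: str,
    authorised: bool,
    fetch_rows: Callable[[], list[str]],
    ask_llm: Callable[[str], str],
    post_message: Callable[[str, str], None],
) -> str:
    """Answer a question, using sheet data only for authorised users."""
    # Unauthorised users skip the data source entirely, so pricing
    # rows never reach the prompt.
    context = "\n".join(fetch_rows()) if authorised else ""
    prompt = f"{context}\n\nQuestion: {question}".strip()
    reply = ask_llm(prompt)
    # The reply goes back to the channel the question came from.
    post_message(channel, reply)
    return reply
```

Because the restricted branch never calls `fetch_rows`, the model cannot reveal data it was never given.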

You have successfully created an AI Knowledge Agent with no coding required. This demonstrates how easily you can leverage Cyclr to enhance your capabilities with different AI tools.

We hope this has sparked some ideas about how an embedded integration platform can expand your SaaS capabilities. If you have any questions or need assistance, don’t hesitate to reach out.

We regularly share integration tutorials, news and educational videos on our YouTube channel.

Check out our videos and learn how integration can expand your SaaS capabilities.